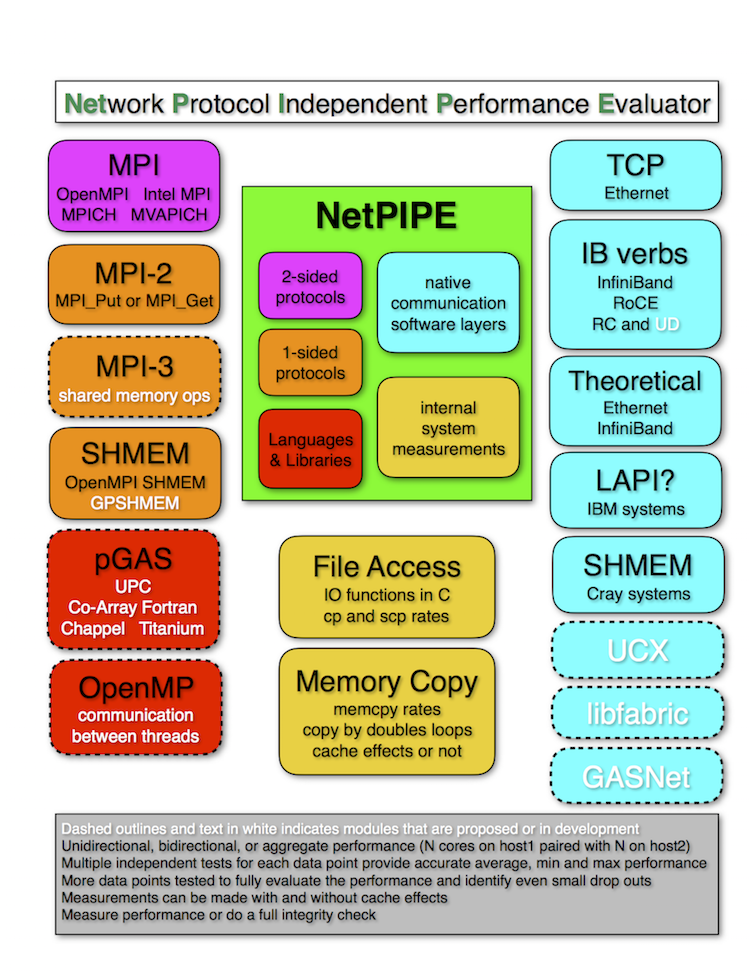

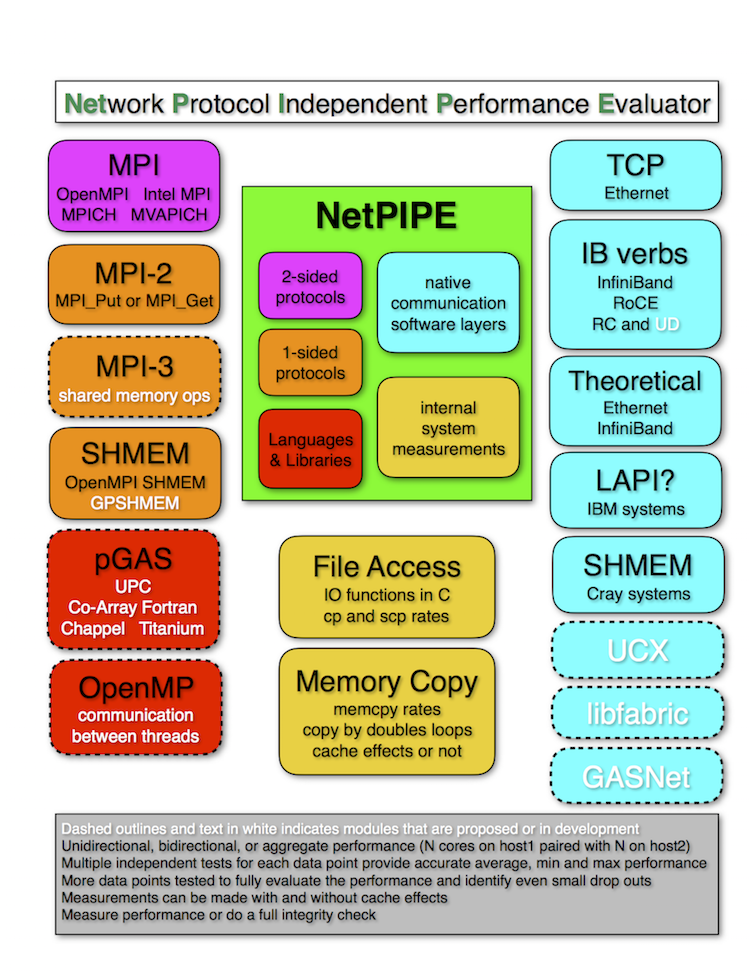

NetPIPE is a protocol-independent communication performance benchmark that visually represents network performance under a variety of conditions. It performs simple ping-pong tests, bouncing messages of increasing size between two processes, whether across a network or within an SMP system. Message sizes are chosen at regular intervals and with slight perturbations to provide a complete test of the communication system. Each data point involves many ping-pong tests to provide accurate timing. For small messages (< 64 bytes), latency is calculated as half the round-trip time.
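As a rough illustration of the ping-pong measurement described above, the following Python sketch bounces a fixed-size message between two endpoints and halves the average round-trip time. It is not NetPIPE's code: the function names (`recv_exact`, `echo_peer`, `ping_pong`) are hypothetical, and it uses a local `socket.socketpair()` with a helper thread instead of two processes on separate hosts, so the numbers only reflect in-process communication.

```python
import socket
import threading
import time


def recv_exact(sock, nbytes):
    """Receive exactly nbytes; a stream socket may return fewer per call."""
    chunks = []
    remaining = nbytes
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def echo_peer(sock, nbytes, repeats):
    """The 'pong' side: receive each message and send it straight back."""
    for _ in range(repeats):
        sock.sendall(recv_exact(sock, nbytes))


def ping_pong(nbytes, repeats=200):
    """Return (one_way_seconds, bits_per_second) for messages of nbytes.

    Assumption: the one-way time is taken as half the average round trip,
    and throughput is derived from that one-way time.
    """
    ping, pong = socket.socketpair()
    peer = threading.Thread(target=echo_peer, args=(pong, nbytes, repeats))
    peer.start()
    payload = b"x" * nbytes
    start = time.perf_counter()
    for _ in range(repeats):
        ping.sendall(payload)      # ping
        recv_exact(ping, nbytes)   # wait for the pong
    elapsed = time.perf_counter() - start
    peer.join()
    ping.close()
    pong.close()
    one_way = elapsed / repeats / 2.0
    return one_way, 8 * nbytes / one_way
```

Calling `ping_pong(8)` gives a latency-style figure for a small message, while a larger size such as `ping_pong(1 << 20, repeats=20)` is closer to a bandwidth measurement. Averaging over many repeats is what smooths out timer noise for each data point.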

It was originally developed at Ames Laboratory / Iowa State University to test MPI and TCP by Quinn Snell, Armin Mikler, John Gustafson, and Guy Helmer. From October 2000 to July 2005 the code was greatly expanded and maintained by Dave Turner at Ames Laboratory with contributions from several past students and employees (Troy Benjegerdes, Xuehua Chen, Adam Oline, Bogdan Vasiliu, and Brian Smith). Much of the core was rewritten, and modules were developed to allow tests on message-passing layers like PVM, TCGMSG, MPI-2, SHMEM, as well as native software layers like GM, InfiniBand VAPI, ARMCI, LAPI, and Cray SHMEM/GPSHMEM.

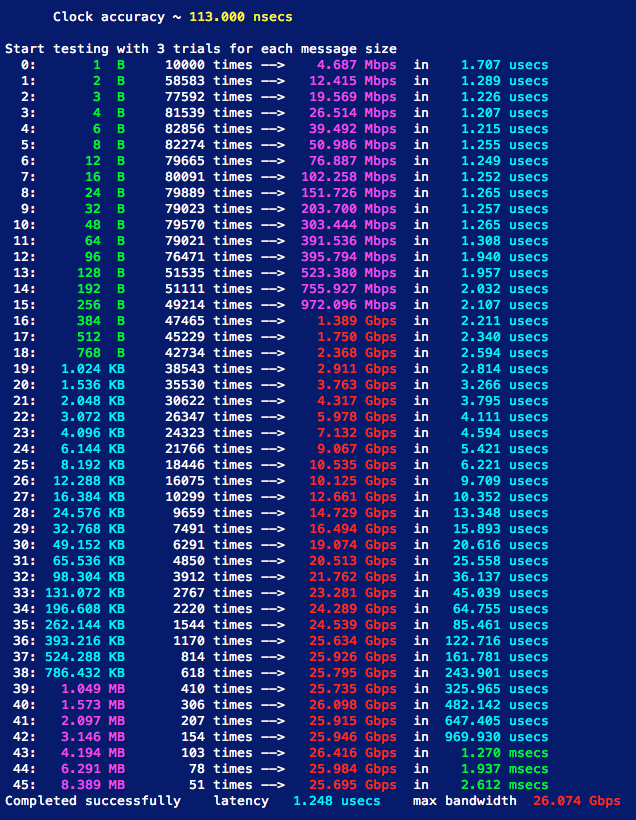

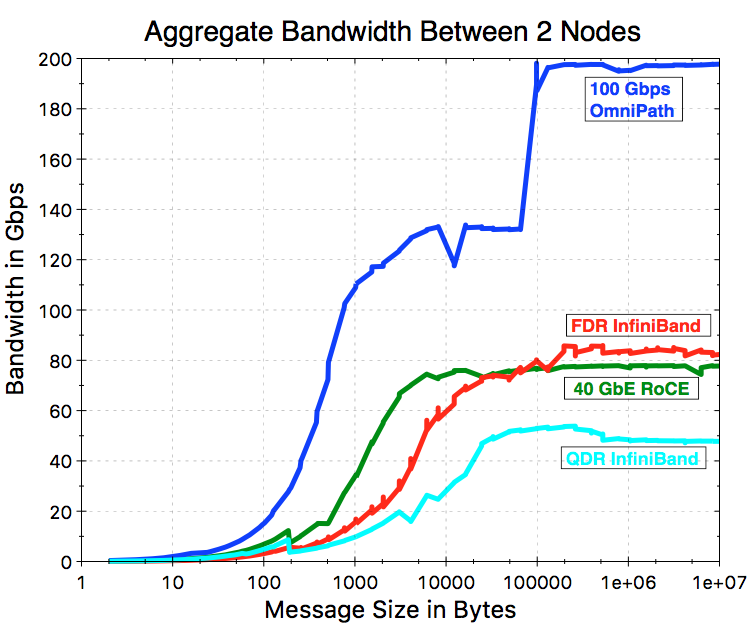

NetPIPE is currently being developed by Dave Turner at Kansas State University. Recent research has focused on expanding NetPIPE beyond simple point-to-point measurements, so that it can now measure aggregate performance across a network link or switch by running multiple simultaneous pairwise ping-pong tests. Modules have also been added for direct testing at the InfiniBand verbs layer, for memory copy performance, and for the IO performance of various output functions in C, along with a theoretical module that lets users compare measured results against the performance expected from a network.

NetPIPE uses simple ping-pong tests to evaluate the communication performance of message-passing layers like MPI, MPI-3 shared memory, MP_Lite, and PVM, as well as one-sided functions like MPI get and put, SHMEM and GPSHMEM, and ARMCI. If I get funding to continue this research, I'd like to develop modules for testing PGAS languages like UPC, Titanium, and Co-Array Fortran.

The performance of many native communication layers can also be tested directly, including TCP, InfiniBand verbs (RC only, UD in development), and LAPI. If I get funding, or someone volunteers to contribute modules, I'd like NetPIPE to be able to measure performance for UCX, libfabric, Portals, and GASNet.

The OSU benchmark suite provides a broad range of ping-pong tests to measure latency and unidirectional and bidirectional performance. NetPIPE goes beyond testing only at factors of 2 by running many trials, including perturbations of +/- 3 bytes on either side of each data point. This more stringent testing is necessary to completely evaluate many network systems and to identify dropouts that would otherwise go unnoticed. NetPIPE can also run aggregate bandwidth tests, using multiple pairwise bidirectional ping-pong tests across the same network link to measure the aggregate bandwidth you can get out of a compute node or across a given network link, or to test switch saturation. Aggregate bandwidth measurements put the most stress on each compute node and represent the conditions that applications will put on the network. These measurements have proven vital to properly tuning today's 100 Gbps networks for optimal performance.
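To make the perturbation idea concrete, here is a minimal Python sketch that builds a list of message sizes with a point 3 bytes below and 3 bytes above each base size. The function name `message_sizes` is hypothetical, and using powers of two as the base sizes is an assumption made for brevity; NetPIPE's actual schedule may use different or additional base points.

```python
def message_sizes(max_bytes, perturbation=3):
    """Return sorted test sizes: each base size plus/minus the perturbation.

    Assumption: base sizes are powers of two up to max_bytes. Sizes that
    fall below 1 byte or above max_bytes are dropped, and duplicates
    created by overlapping perturbations are removed.
    """
    sizes = set()
    base = 1
    while base <= max_bytes:
        for size in (base - perturbation, base, base + perturbation):
            if 1 <= size <= max_bytes:
                sizes.add(size)
        base *= 2
    return sorted(sizes)
```

For example, `message_sizes(16)` returns `[1, 2, 4, 5, 7, 8, 11, 13, 16]`. Testing just off each base size is what exposes dropouts that appear only when a message is slightly smaller or larger than a buffer or packet boundary.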

Download NetPIPE-5.1.4.tar.gz

git clone https://gitlab.beocat.ksu.edu/daveturner/netpipe-5.x.git

Compiling and running NetPIPE is quick and easy. See the README file for more complete directions. All modules that use more than one process require MPI, which handles the synchronization, broadcast, and gather functions and also eases setup.

make mpi

mpirun -np 2 --hostfile np.hosts NPmpi -o np.mpi.qdr

where np.hosts for OpenMPI is:

host1 slots=1

host2 slots=1

make mpi

mpirun -np 64 --hostfile np.hosts NPmpi --async --bidir -o np.mpi.aggregate

where np.hosts for OpenMPI is:

host1 slots=32

host2 slots=32

NetPIPE: Network Protocol-Independent Performance Evaluator

Quinn Snell, Armin Mikler, and John Gustafson

Protocol-Dependent Message-Passing Performance on Linux Clusters

Dave Turner and Xuehua Chen, Proceedings of the IEEE International Conference on Cluster Computing (Cluster 2002), Chicago, Illinois, September 23-26, 2002.

Integrating New Capabilities into NetPIPE

Dave Turner, Adam Oline, Xuehua Chen, and Troy Benjegerdes, Recent Advances in Parallel Virtual Machine and Message Passing Interface, 10th European PVM/MPI conference, Venice, Italy, pp. 37-44 (October 2003).